NEC develops lightweight LLM with world-class Japanese language proficiency and just 13 billion parameters

6 July 2023, 2:30 pm

Tokyo, July 6, 2023 - NEC Corporation (NEC; TSE: 6701) has developed its own generative AI Large Language Model (LLM) for Japanese. This LLM is a general-purpose model, also known as a foundation model, developed by NEC using multilingual data the company collected and processed independently.

Through unique innovations, this LLM achieves high performance with only 13 billion parameters. Its light weight and high speed not only reduce power consumption but also allow it to run in both cloud and on-premises environments. In terms of performance, the LLM has achieved world-class Japanese language proficiency on a Japanese language benchmark that measures knowledge of the Japanese language and reading comprehension, the latter corresponding to the model’s reasoning ability.

NEC has already begun using this LLM for internal operations, applying it not only for common operations, but also for improving the efficiency of various tasks, such as document creation and coding support. In recent years, generative AI such as "ChatGPT" has been attracting worldwide attention, and its use is rapidly increasing across a wide range of industries. However, most existing LLMs are trained mainly in English, and there are almost no LLMs that can be customized for use in a variety of industries while possessing high Japanese language ability.

NEC's development of this new LLM is expected to further accelerate the business use of LLMs and help improve corporate productivity.

NEC's LLM features

1. High Japanese language proficiency

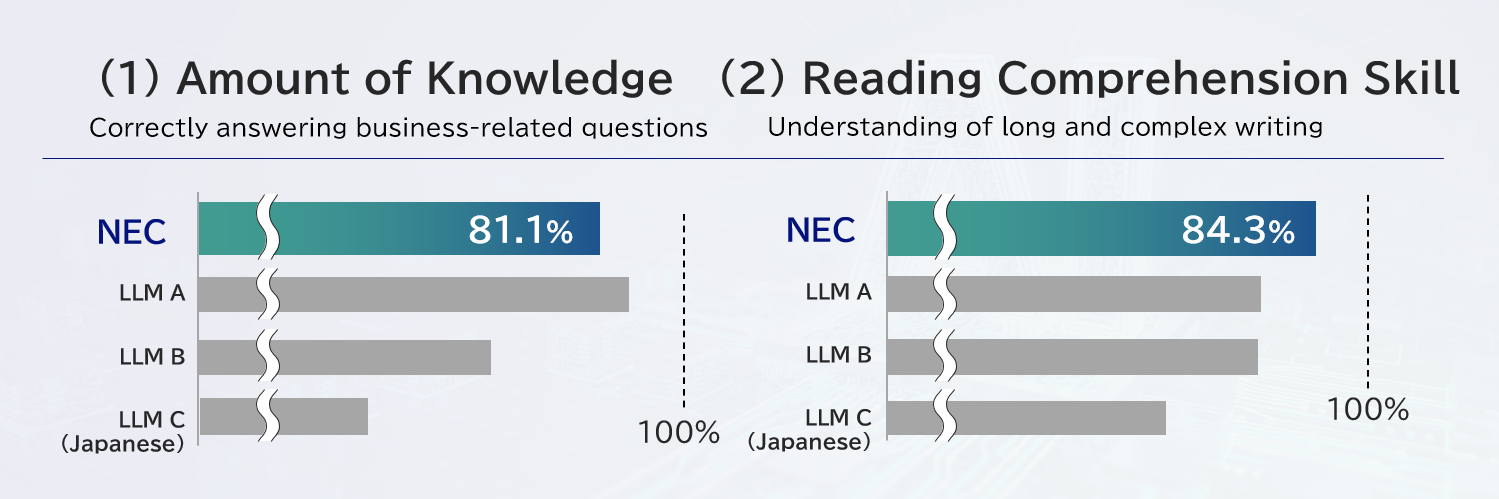

To use an LLM in actual business, it must perform well in both Japanese language knowledge and comprehension. NEC evaluated its LLM using JGLUE, a Japanese language comprehension benchmark that is a standard in the field of natural language processing*. In question answering, which corresponds to the amount of Japanese language knowledge, the LLM scored 82.3%, placing it in the top class. In document reading comprehension, which corresponds to reasoning ability, it scored 82.1%, exceeding the top vendor. As a result, NEC’s LLM is expected to perform well in a variety of industries.

2. Light weight

This LLM has high performance, yet NEC's proprietary technology has reduced the size of the model to a compact 13 billion parameters. While conventional high-performance LLMs require a large number of GPUs, this LLM can run on a standard server with a single GPU. As a result, business applications that incorporate the LLM can respond quickly, and running on a single GPU helps reduce power consumption and server costs during business operations. In addition, the LLM can be built easily in a short period of time and can run in a customer's on-premises environment, making it safe to use even for highly confidential operations.

Evaluation results by JGLUE, a Japanese language comprehension benchmark (NEC research)

Parameter size is often used as an indicator of an LLM's performance. However, increasing the parameter size reduces inference speed and increases both the number of GPUs and the power consumption required to operate the model. Consequently, if the same performance can be provided, fewer parameters are desirable. In this study, NEC focused on the fact that LLM performance depends not only on parameter size, but also on the amount of high-quality training data and on training time. NEC therefore achieved high performance by limiting the parameter size to a range that can be operated with a single GPU, while using a large amount of both data and computation time.
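The link between parameter size and GPU count can be illustrated with a rough estimate of the memory needed just to hold a model's weights. The sketch below is illustrative only: the 2 bytes per parameter (16-bit weights), the 80 GB GPU capacity, and the 175-billion-parameter comparison model are assumptions chosen for the example, not figures published by NEC, and the estimate ignores activations and other runtime memory.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights only, in GB (10^9 bytes).

    bytes_per_param=2 assumes 16-bit weights (an assumption, not an NEC figure).
    """
    return num_params * bytes_per_param / 1e9


def fits_on_single_gpu(
    num_params: float,
    gpu_memory_gb: float = 80.0,  # hypothetical GPU capacity
    bytes_per_param: int = 2,
) -> bool:
    """Return True if the weights alone fit in one GPU's memory."""
    return weight_memory_gb(num_params, bytes_per_param) < gpu_memory_gb


if __name__ == "__main__":
    # 13 billion parameters versus a hypothetical 175-billion-parameter model.
    for num_params in (13e9, 175e9):
        size = weight_memory_gb(num_params)
        verdict = "fits" if fits_on_single_gpu(num_params) else "does not fit"
        print(f"{num_params / 1e9:.0f}B parameters: ~{size:.0f} GB of weights, "
              f"{verdict} on one 80 GB GPU")
```

Under these assumptions, a 13-billion-parameter model needs roughly 26 GB for its weights, which is consistent with single-GPU operation, while a model more than ten times larger would have to be split across several GPUs.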

NEC independently developed the largest supercomputer for AI research among Japanese companies, and it has been fully operational since March 2023. By utilizing this supercomputer, NEC was able to construct a high-performance 13-billion-parameter LLM in a short period of approximately one month.

Going forward, NEC aims to leverage the foundation model to actively promote the development of LLMs for individual companies using closed customer data. NEC also plans to improve the performance of the foundation model itself, and to put these technologies to practical use as soon as possible through the NEC Generative AI Hub.

Note:

In our benchmarks, the models were not fine-tuned (supervised) on the training data; only a few examples were provided to the LLMs in an in-context learning setting. The JCommonsenseQA dataset was used to evaluate how knowledgeable the LLMs are about common sense, with 3 examples used for in-context learning.

The JSQuAD dataset was used to evaluate reading comprehension, with 2 examples used for in-context learning. The Exact Match score is used as the evaluation metric. "LLM C (Japanese)" indicates the best-performing model among the Japanese LLMs; its evaluation scores were either obtained from experiments conducted by NEC or taken from the original paper.
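The evaluation setup described in this note, few-shot in-context learning scored by Exact Match, can be sketched as follows. This is a minimal illustration, not NEC's evaluation code: the prompt template, the function names, and the whitespace-only normalization are all assumptions, and it compares each prediction against a single reference answer.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Prepend solved examples to the new question (hypothetical template).

    No model weights are updated; the examples only appear in the prompt.
    """
    parts = [f"Question: {q}\nAnswer: {a}" for q, a in examples]
    parts.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(parts)


def exact_match(prediction: str, reference: str) -> bool:
    """True only if the strings are identical after trimming whitespace."""
    return prediction.strip() == reference.strip()


def exact_match_score(predictions: List[str], references: List[str]) -> float:
    """Percentage of predictions that exactly match their reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    hits = sum(exact_match(p, r) for p, r in zip(predictions, references))
    return 100.0 * hits / len(predictions)


if __name__ == "__main__":
    # Two demonstrations, mirroring the 2-example setting used for JSQuAD.
    demos = [("日本の首都は?", "東京"), ("富士山の高さは?", "3776メートル")]
    print(build_few_shot_prompt(demos, "日本で一番長い川は?"))
    print(exact_match_score(["信濃川", "大阪"], ["信濃川", "京都"]))
```

Because Exact Match gives no credit for partially correct answers, it is a strict metric: a prediction that differs from the reference by even one character counts as wrong.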

*******************************

About NEC Corporation

NEC Corporation has established itself as a leader in the integration of IT and network technologies while promoting the brand statement of “Orchestrating a brighter world.” NEC enables businesses and communities to adapt to rapid changes taking place in both society and the market as it provides for the social values of safety, security, fairness and efficiency to promote a more sustainable world where everyone has the chance to reach their full potential. For more information, visit NEC at http://www.nec.com.

LinkedIn: https://www.linkedin.com/company/nec/

YouTube: https://www.youtube.com/user/NECglobalOfficial

Facebook: https://www.facebook.com/nec.global/

NEC is a registered trademark of NEC Corporation. All Rights Reserved. Other product or service marks mentioned herein are the trademarks of their respective owners. ©2023 NEC Corporation.

Media Contact

Liz Ackroyd

Communications Manager

liz.ackroyd@nec.com.au

0405 707 161